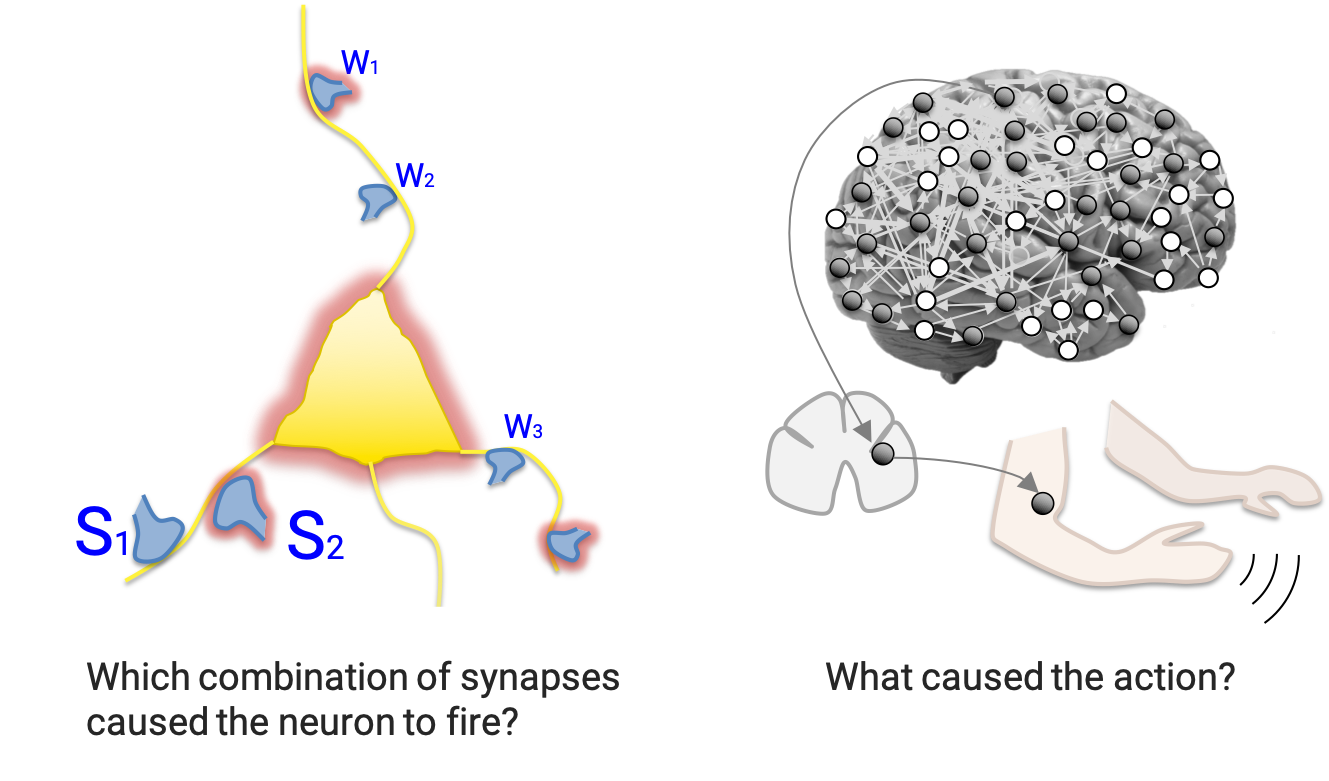

Actual causation is concerned with the question: “What caused what?” Consider a transition between two states within a system of interacting elements, such as an artificial neural network or a biological brain circuit. Which combination of synapses caused the neuron to fire? Which image features caused the classifier to misinterpret the picture? Even detailed knowledge of the system’s causal network (its elements, their states, connectivity, and dynamics) does not automatically answer the “what caused what?” question.

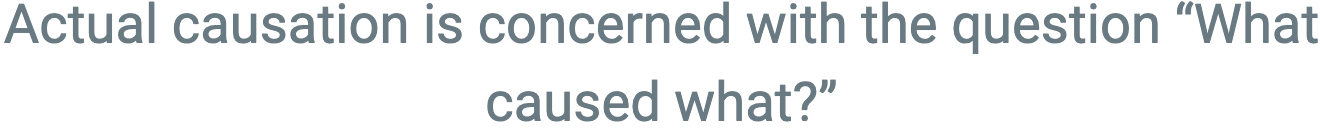

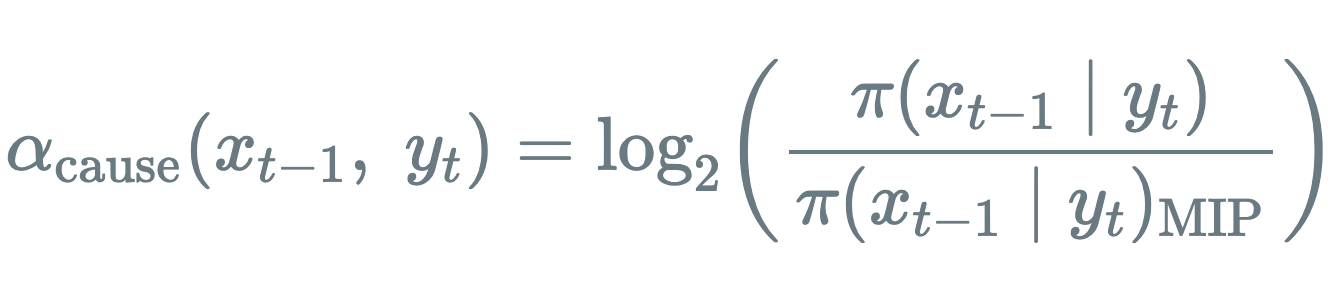

To address this question in a principled manner, we have developed a formal account of actual causation based on the five causal principles of integrated information theory (IIT), namely existence (here: realization), composition, information, integration, and exclusion. The formalism applies generally to discrete Markovian dynamical systems composed of interacting elements, and it extends naturally from deterministic to probabilistic causal networks and from binary to multi-valued variables. Finally, it allows us to quantify the causal strength between an occurrence and its cause or effect in informational terms (Albantakis et al. 2019).
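As a rough illustration of what “causal strength in informational terms” can look like, the sketch below computes two quantities for a hypothetical two-input AND gate. `rho` measures, in bits, how much an occurrence raises the probability of its effect relative to the unconstrained probability. `alpha` measures how much of that is lost at the weakest cut between the occurrence and its effect. The toy system, the uniform averaging over unconstrained inputs, the restricted set of cuts, and all function names are assumptions made for this example. The formalism in Albantakis et al. (2019) defines cause and effect repertoires and partitions more generally, so this is a simplified sketch, not the published algorithm.

```python
from itertools import combinations, product
from math import log2

# Toy system (assumption for illustration): binary inputs A and B at time t-1
# drive a single binary element C at time t via an AND gate.
INPUTS = ("A", "B")


def and_gate(state: dict) -> int:
    """Hypothetical mechanism: C = A AND B."""
    return state["A"] & state["B"]


def prob_c(fixed: dict, c: int) -> float:
    """P(C = c | fixed inputs). Inputs not in `fixed` are averaged over
    uniformly (a simplifying assumption)."""
    free = [name for name in INPUTS if name not in fixed]
    states = list(product((0, 1), repeat=len(free)))
    hits = sum(
        and_gate({**fixed, **dict(zip(free, combo))}) == c for combo in states
    )
    return hits / len(states)


def effect_strength(x: dict, c: int) -> tuple[float, float]:
    """Return (rho, alpha) in bits for the occurrence x having the effect C = c.

    rho:   log2 of P(C=c | x) relative to the unconstrained P(C=c).
    alpha: the smallest log2 ratio of P(C=c | x) to the probability obtained
           when C is cut off from part or all of x (simplified cuts only).
    """
    if not x:
        raise ValueError("x must contain at least one input")
    p_full = prob_c(x, c)
    if p_full == 0:
        raise ValueError("C = c cannot occur given x")
    rho = log2(p_full / prob_c({}, c))
    names = list(x)
    # Each proper subset of x is the part C stays connected to; the rest is cut.
    alpha = min(
        log2(p_full / prob_c({n: x[n] for n in kept}, c))
        for r in range(len(names))
        for kept in combinations(names, r)
    )
    return rho, alpha
```

For example, `effect_strength({"A": 1, "B": 1}, 1)` returns `(2.0, 1.0)`: in this toy, the joint input state raises the probability of C = 1 from 1/4 to 1 (2 bits), and cutting C off from either input alone loses 1 bit, so the link is irreducible under the simplified cuts used here.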

As shown in Juel et al. (2019), the Actual Causation framework can also be used to trace the causes of an action back in time (“causes of causes”) and to evaluate the spatial and temporal extent to which internal mechanisms and states contributed to the actual causes of an agent’s actions, as opposed to those actions being driven by its sensory inputs.


Artificial neural networks (ANNs), such as the classifier in the interactive demo, provide a rich test bed for theoretical developments on actual causation. This small demo shows that our actual causation measure can be used to evaluate which neurons in the network are most relevant for determining the final outcome or the state of neurons in intermediate layers. The network was trained on the Iris data set, with each input feature vector first translated into a 10-bit string. Connection strengths are indicated by the thickness of the lines between neurons. Four example inputs can be tested; black indicates state 1 and white state 0. To facilitate the causal analysis, the continuous activations of the intermediate layers are binarized around 0: activations greater than 0 are set to 1, all others to 0.

Using the buttons on the right, two layers can be selected to compute the associated causal account (“what caused what”). Alternatively, sets of neurons from two layers can be selected by clicking directly on them to compute the strength (α) of their causal link. The inset on the bottom right shows the average involvement (summed causal strength) of each neuron in determining the output for a given class of inputs.

To date, the full theoretical analysis can only be applied to rather small neural networks. Our goal is to use ANNs to test and develop practical approximations of the actual causation formalism.
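The binarization rule and the per-class involvement summary described above can be sketched as follows. This is a minimal sketch under stated assumptions: causal links are taken to be already computed (for instance with the actual causation formalism) and supplied as `(neurons, strength)` pairs, a link’s full strength is credited to every neuron it contains, and involvement is averaged over all inputs of a class. The function names and data layout are hypothetical and are not the demo’s code.

```python
from collections import defaultdict


def binarize(activations: list[float]) -> list[int]:
    """Binarize continuous activations around 0: > 0 becomes 1, otherwise 0."""
    return [1 if a > 0 else 0 for a in activations]


def average_involvement(samples):
    """Average summed causal strength per neuron, per input class.

    samples: iterable of (class_label, links), where links is a list of
    (neurons, strength) pairs for one input. Assumption: every neuron in a
    link is credited with that link's full strength.
    """
    totals = defaultdict(lambda: defaultdict(float))  # class -> neuron -> sum
    counts = defaultdict(int)  # class -> number of inputs seen
    for label, links in samples:
        counts[label] += 1
        for neurons, strength in links:
            for neuron in neurons:
                totals[label][neuron] += strength
    # Divide each neuron's summed strength by the number of inputs in its class.
    return {
        label: {neuron: s / counts[label] for neuron, s in per_neuron.items()}
        for label, per_neuron in totals.items()
    }
```

Because each sum is divided by the total number of inputs in the class, an input whose causal links do not involve a given neuron contributes zero to that neuron’s average.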

References

Albantakis L, Marshall W, Hoel E, Tononi G (2019). What caused what? A quantitative account of actual causation using dynamical causal networks. Entropy, 21(5), 459.

Juel BE, Comolatti R, Tononi G, Albantakis L (2019). When is an action caused from within? Quantifying the causal chain leading to actions in simulated agents. arXiv preprint arXiv:1904.02995.

Grasso M, Albantakis L, Lang JP, Tononi G (2021). Causal reductionism and causal structures. Nature Neuroscience, 24(10), 1348-1355.

Acknowledgements

The classifier demo was made possible by funding from a 2020 FQXi Mini Grant (FQXi-MGB-1810) and through the support of a grant from Templeton World Charity Foundation, Inc. (TWCF0196).